Socially Assistive Robotics

I spent two months in the summer of 2016 as an intern at the Interaction Lab at USC, working on Socially Assistive Robotics (SAR). The lab's mission was to design and implement robots that could form relationships with children, including those with social deficits, and encourage their social, cognitive, and emotional growth. I worked on building robust, easy-to-use infrastructure for conducting autonomous, long-term in-home studies of how children and their parents interact with the robot and its interface, in order to understand what increases engagement.

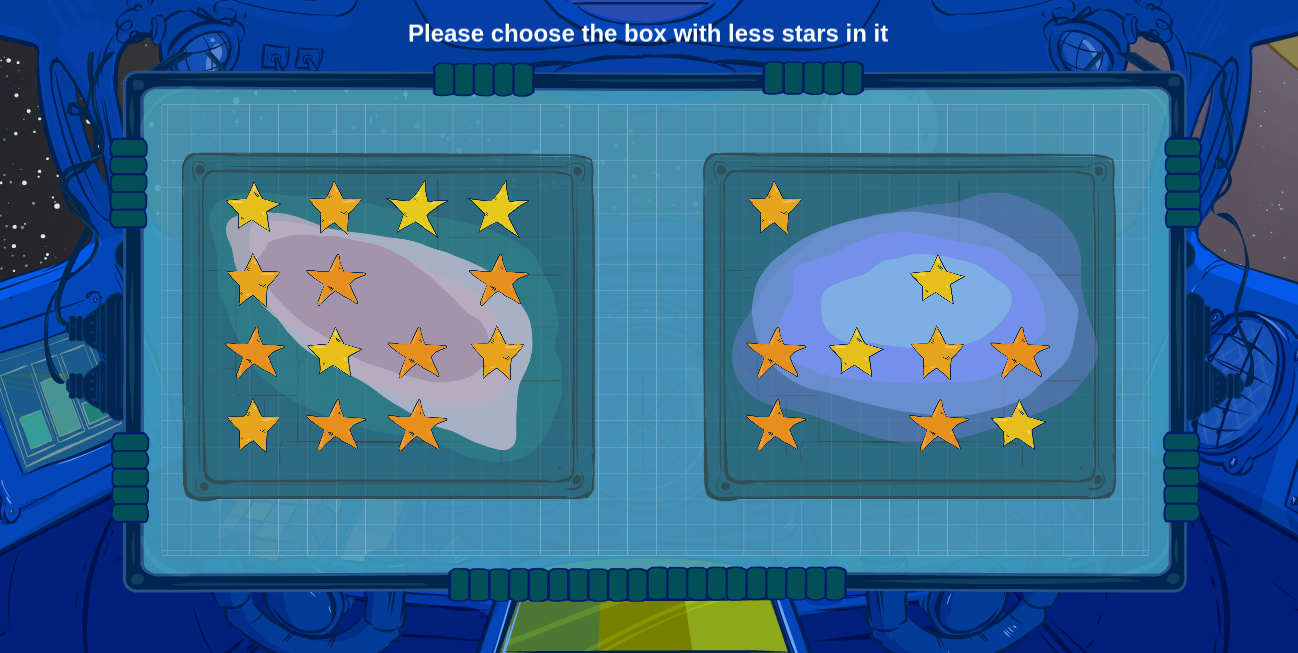

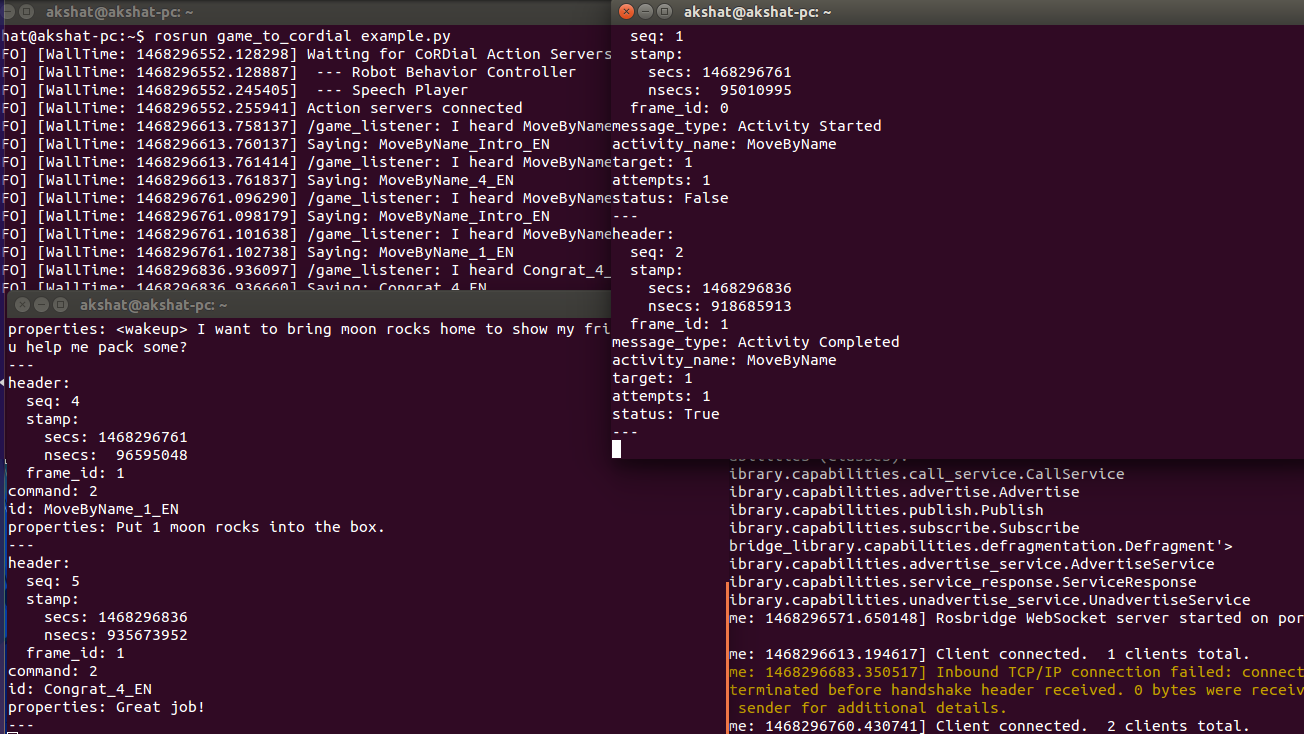

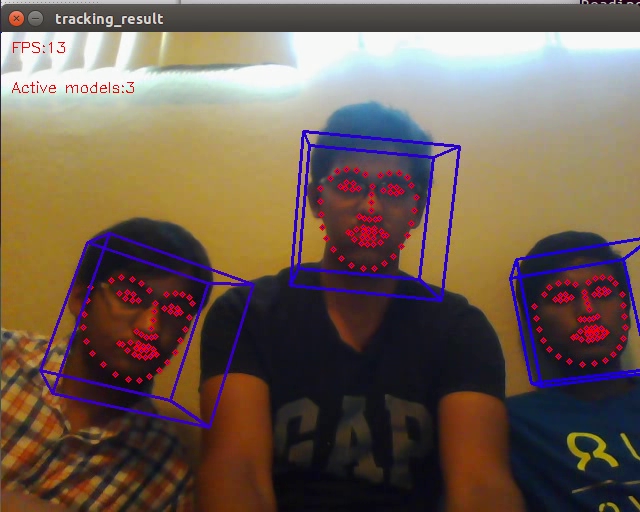

I developed a number-concepts game in JavaScript and defined its interactions with the MIT DragonBot, which acts as a knowledgeable peer to the child, giving auditory and visual responses based on the child’s attempts at the game. I also developed ROS wrappers for open-source facial recognition and analysis libraries so that the robot could use that information in real time. Finally, I containerized the entire software stack to simplify installation and ensure portability. This work was done for the NSF Expeditions in Computing Grant for Socially Assistive Robotics.

A poster summarizing my work can be accessed here: [Poster]